Auditing

“Relying solely on the hardware random number generator which is using an implementation sealed inside a chip which is impossible to audit is a BAD idea.” - Theodore Ts’o (creator of the Linux /dev/random device).

Or, how can we verify for ourselves that a TRNG does what They say it does?

“The validation of an entropy source presents many challenges. No other part of an RBG is so dependent on the technological and environmental details of an implementation. At the same time, the proper operation of the entropy source is essential to the security of an RBG.” - National Institute of Standards and Technology.

Even if you believe that the venerable /dev/random is truly Kolmogorov random, it can block (at least on older Linux kernels) and is fairly slow, perhaps too slow to produce much one time pad material. If that is your bent, you’ll have to go to the market. There are many ready built commercial devices that might be considered for your TRNG, but be warned. How do you know (verifiably) that the number sequence being produced is not predictable by any state actor or otherwise? Can they be audited and proven cryptographically secure? Just what exactly is inside those packages of black epoxy and metal? Consider the following three examples of typical commercial TRNGs:-

ID Quantique's TRNG as a complex PCIe card.

Encapsulated TrueRNGv3 USB sized device.

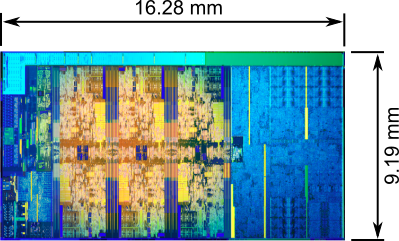

Intel i7 (Coffee Lake) CPU featuring RDRAND/RDSEED instructions.

Intel i7 CPU die with over 1 billion transistors across 11 layers.

We have a printed circuit board with a shielded enclosure and a closed source FPGA, a sealed USB device, and a preeetty complex microprocessor whose die has 11 layers. All purport to produce true random numbers of the highest quality, and at (perhaps unbelievably) high rates. We therefore issue the following challenge:-

Prove that the output from any black box TRNG is not just some variant of the encryption:-

$$ \text{E}_{k \oplus id} (x) $$

where $ k $ = some shadowy agency’s key, $ id $ = a device identification/serial number and $ x $ is some counter and/or time. Perhaps $ \text{E} $ is AES in counter mode, or some other CSPRNG? Some form of NIST SP 800-90A HMAC_DRBG? No analysis of the output data alone can mathematically disprove it: a good cipher's output is, by design, computationally indistinguishable from random to anyone without the key.
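To make the challenge concrete, here is a minimal Python sketch of such a black box. It is an illustration under stated assumptions, not a claim about any real product: `SHADOW_KEY`, `DEVICE_ID` and `backdoored_trng` are hypothetical, and HMAC-SHA256 is swapped in for the keyed function E only because AES is not in Python's standard library.

```python
import hashlib
import hmac

# Hypothetical values for illustration only; they do not come from any real device.
SHADOW_KEY = bytes(range(32))                 # k: the agency's 256-bit key
DEVICE_ID = (123456789).to_bytes(32, "big")   # id: serial number, padded to 32 bytes


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equal-length byte strings, i.e. k XOR id."""
    if len(a) != len(b):
        raise ValueError("operands must be the same length")
    return bytes(x ^ y for x, y in zip(a, b))


def backdoored_trng(key: bytes, device_id: bytes, n_blocks: int) -> bytes:
    """Return n_blocks * 32 bytes computed as E_{k XOR id}(x) for x = 0, 1, 2, ...

    HMAC-SHA256 stands in for E (an assumption); AES in counter mode
    would serve the argument equally well.
    """
    derived_key = xor_bytes(key, device_id)
    out = bytearray()
    for x in range(n_blocks):
        # x plays the role of the counter/time input to E.
        out += hmac.new(derived_key, x.to_bytes(16, "big"), hashlib.sha256).digest()
    return bytes(out)


# The user of the device sees bytes that look random...
device_output = backdoored_trng(SHADOW_KEY, DEVICE_ID, n_blocks=4)
# ...while the key holder can regenerate exactly the same stream at will.
agency_prediction = backdoored_trng(SHADOW_KEY, DEVICE_ID, n_blocks=4)
print(device_output == agency_prediction)  # True
```

Each device gets its own stream because its serial number is mixed into the key, so no two units ever emit the same sequence, yet every one of them is transparent to whoever holds $ k $.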

Tamper resistance, which is keenly marketed as a positive feature of these devices, becomes counterproductive in terms of verifiability and independent audit. We are left with no option but to trust the manufacturer’s brand and integrity. What else can a user do? Forcing open/delidding a hardware device risks damaging it, and in any case all of the important operations are implemented in code embedded within very highly integrated chips.

The Core i* processors are a case in point. Even if the die were exposed, a contemporary microprocessor is a multilayered silicon fabrication. It is practically impossible to identify the parts of the architecture responsible for implementing the RDRAND/RDSEED instructions, never mind reverse engineer them to confirm how they operate. Unfortunately for transparency, such a need for trust then collides with realpolitik. And even if raw entropy were available, is it actually used, or is it all disinformation and a very deep and scary rabbit hole? Tamper resistance and realpolitik can also combine under the pretext of protecting everyone’s intellectual property: see The DMCA and its Chilling Effects on Research. Auditing/analysing a commercial TRNG can land you in jail.

Reproducibility is one of the fundamental tenets of the scientific method. It is the ability to independently reproduce experimental results using a similar methodology to the initial experiment, and it has debunked many sensational findings. The philosopher Karl Popper noted that “non-reproducible single occurrences are of no significance to science”. Nor are they of any significance to secure and auditable cryptography. Devices (such as hardware security modules) and chips implementing deterministic cryptographic primitives/constructions, including pseudo random number generators, can be verified as correct through the use of standardised test vectors. For example, AES, SHA-x and HMAC_DRBG test vectors are readily available.
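A known-answer test is the simplest use of such vectors. The Python sketch below checks an implementation against two widely published SHA-256 vectors (the digests of `"abc"` and of the empty message). Here `hashlib` is assumed to be the trusted reference, and `faulty_device_sha256` is a hypothetical stand-in for a device that gets the algorithm subtly wrong.

```python
import hashlib

# Published SHA-256 known-answer vectors: message -> expected hex digest.
KNOWN_ANSWERS = {
    b"abc": "ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad",
    b"": "e3b0c44298fc1c149afbf4c8996fb92427ae41e4649b934ca495991b7852b855",
}


def passes_known_answer_test(sha256_impl) -> bool:
    """True if sha256_impl(message) returns the expected hex digest for every vector."""
    return all(sha256_impl(msg) == expected for msg, expected in KNOWN_ANSWERS.items())


def reference_sha256(message: bytes) -> str:
    """Trusted reference: Python's own SHA-256."""
    return hashlib.sha256(message).hexdigest()


def faulty_device_sha256(message: bytes) -> str:
    """Hypothetical faulty device: silently appends a zero byte before hashing."""
    return hashlib.sha256(message + b"\x00").hexdigest()


print(passes_known_answer_test(reference_sha256))      # True
print(passes_known_answer_test(faulty_device_sha256))  # False
```

The same approach works for any deterministic construction, which is exactly why it cannot be applied to a TRNG.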

The inability to audit modern circuitry is, ironically, not lost on governments either. The AES competition required Monte Carlo test vectors to be provided, drawing upon requirements from NBS Special Publication 500-20, Validating the Correctness of Hardware Implementations of the NBS Data Encryption Standard:-

“Since the test set is known to all, an additional series of tests is performed using pseudo-random data to verify that the device has not been designed just to pass the test set. In addition a successful series of Monte Carlo tests give some assurance that an anomalous combination of inputs does not exist that would cause the device to hang or otherwise malfunction for reasons not directly due to the implementation of the algorithm. While the purpose of the DES test set is to insure that the commercial device performs the DES algorithm accurately, the Monte Carlo test is needed to provide assurance that the commercial device was not built expressly to satisfy the announced tests.”

So the US government doesn’t trust companies to not cheat either. Glad it’s not just us then!
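The Monte Carlo idea can be sketched in Python as follows. This is a simplified chaining scheme assuming SHA-256 as the algorithm and `hashlib` as the trusted reference; it is not the exact procedure from SP 500-20 or the AES/SHA validation suites, and `honest_device` and `cheating_device` are hypothetical. Each new input is the previous output, so a device built only to satisfy the published test set is caught almost immediately.

```python
import hashlib

# The announced test set that a cheating device might be hard-wired to pass.
PUBLISHED = {b"abc": hashlib.sha256(b"abc").digest()}


def honest_device(message: bytes) -> bytes:
    """Hypothetical device that really implements SHA-256."""
    return hashlib.sha256(message).digest()


def cheating_device(message: bytes) -> bytes:
    """Hypothetical device that is correct only for the published test set."""
    return PUBLISHED.get(message, b"\x00" * 32)


def monte_carlo_compare(device_hash, seed: bytes, iterations: int = 1000):
    """Chain inputs through the reference and compare the device at every step.

    Returns (passed, number_of_iterations_completed).
    """
    message = seed
    for i in range(iterations):
        expected = hashlib.sha256(message).digest()
        if device_hash(message) != expected:
            return False, i
        message = expected  # next input depends on every previous output
    return True, iterations


print(monte_carlo_compare(honest_device, b"abc"))    # (True, 1000)
print(monte_carlo_compare(cheating_device, b"abc"))  # (False, 1)
```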

A TRNG is entirely different. No such standard output is possible for a TRNG by its very nature: if its output could be reproduced from a published test vector, it would not be truly random. We are therefore left only with statistical analyses, visual inspection and the hope that we’re not being sold a ‘cold fusion’ type TRNG. Benedikt Stockebrand has given a well meaning video presentation about auditability.
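The limits of statistical analysis can be shown with the simplest test of all. The Python sketch below implements the frequency (monobit) test from NIST SP 800-22 and applies it both to operating system randomness and to a fully predictable HMAC counter stream keyed with a hypothetical key. Both are expected to pass at the conventional 0.01 threshold almost every time, and nothing in the p-value reveals which stream an agency could reproduce.

```python
import hashlib
import hmac
import math
import os


def monobit_p_value(data: bytes) -> float:
    """Frequency (monobit) test after NIST SP 800-22, section 2.1.

    Maps each bit to +1 (one) or -1 (zero), sums them, and returns the
    p-value; values below 0.01 are conventionally taken as non-random.
    """
    n = len(data) * 8
    ones = sum(bin(byte).count("1") for byte in data)
    s_n = ones - (n - ones)  # (+1 per one bit) + (-1 per zero bit)
    s_obs = abs(s_n) / math.sqrt(n)
    return math.erfc(s_obs / math.sqrt(2))


def hmac_counter_stream(key: bytes, n_blocks: int) -> bytes:
    """Fully predictable stream: HMAC-SHA256(key, counter) for counter = 0..n_blocks-1."""
    return b"".join(
        hmac.new(key, i.to_bytes(16, "big"), hashlib.sha256).digest()
        for i in range(n_blocks)
    )


kernel_bytes = os.urandom(4096)                                     # OS randomness
predictable = hmac_counter_stream(b"hypothetical agency key", 128)  # 128 * 32 = 4096 bytes

for label, sample in [("os.urandom", kernel_bytes), ("HMAC counter", predictable)]:
    p = monobit_p_value(sample)
    print(f"{label}: p = {p:.3f} ({'pass' if p >= 0.01 else 'fail'})")
```

Stronger batteries such as the rest of SP 800-22 or dieharder detect more kinds of bias, but none of them can detect a secret key.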

If you are going to make something auditable, then don’t do it half-heartedly with token efforts. Do it with gusto and stick it right into people’s faces. The following are B-52 bombers that were decommissioned at Davis–Monthan Air Force Base under the 1991 START Treaty. They are parked like this to allow independent auditing by Russian satellites:-

Decommissioned B-52 bombers arranged for Russian satellite inspection.

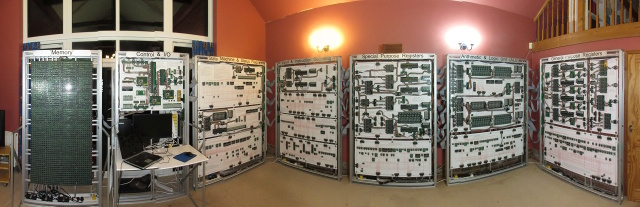

Or build it large, like the 7 foot tall, 104 pound DiceOMatic random number generator. No sleight of hand there. Or an organic entropy harvester utilising physical motion, sound and light. Silly/art? Perhaps a little, but it shares the same concept with academic research for using mobile phone motion instead of a bat. Or go even larger, with the room-sized £40,000+ Megaprocessor, which looks like:-

Showing how a CPU works internally.

The very serious principle underlying the above examples is that sometimes you have to go to extraordinary lengths to convince people of some things. The Russians didn’t just naively ‘trust’ the Americans to decommission. With strong cryptography still regarded as a munition, should we be any different, and just trust the manufacturers too? The creators of the above examples went to great lengths because they believed that the stakes were high. The production of secure, unpredictable and Kolmogorov random entropy is no exception.

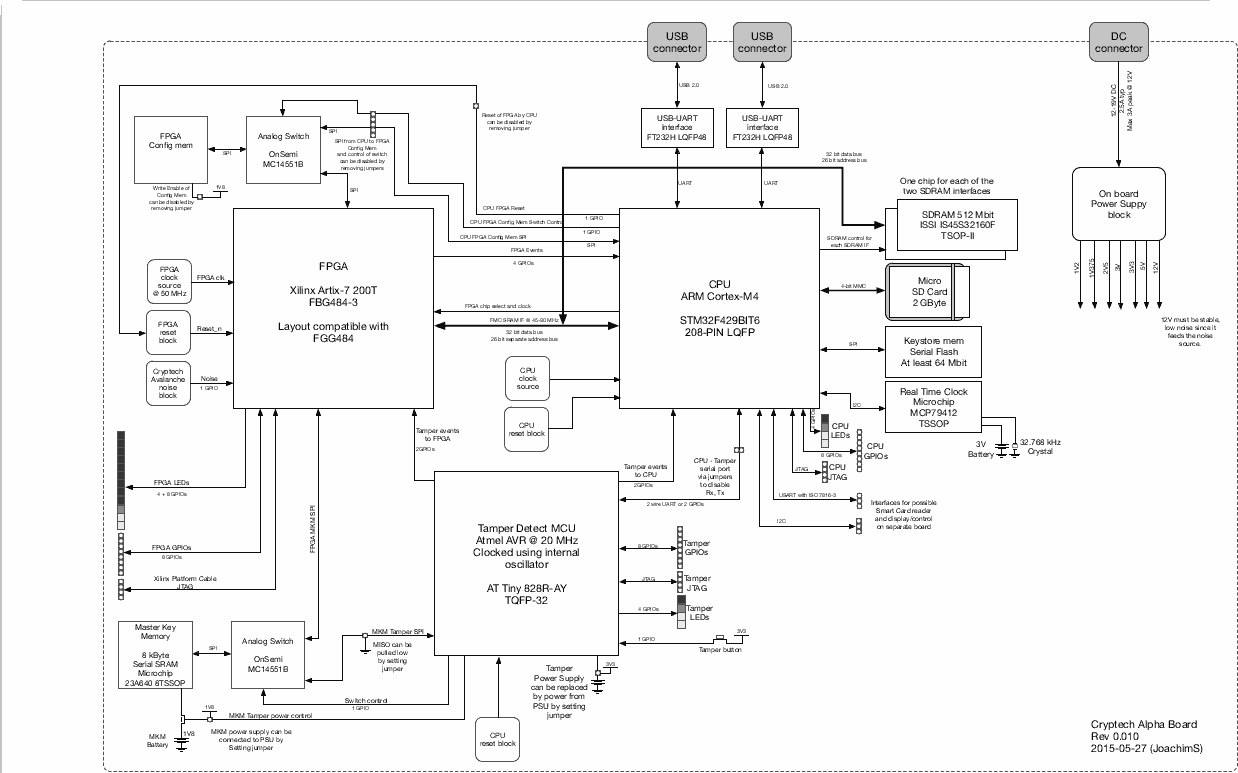

There is a laudable but flawed attempt at an auditable open source/open hardware cryptographic engine at https://cryptech.is/. They say it “meets the needs of high assurance”, yet this is the architecture diagram:-

The allegedly auditable Cryptech cryptographic engine.

The circuit features two microcontrollers and an FPGA chip, plus numerous support chips and glue logic, all surface mounted. Somewhere in there (at 9 o’clock) is the avalanche-based entropy source that presumably acts as the TRNG. Simple and easily auditable? Perhaps not.

Likewise the BitBabbler TRNG: another attempt at auditability, but this time with technobabble instead of a schematic, offering concepts like “Spooky math at a distance” and “Folding space and time”. Huh? There is also no access to the raw entropy. The generation rate can’t be measured, so is (output length) ≤ (entropy generated), as information theoretic security requires? If not, it’s just a fancy CSPRNG, or even worse a PRNG. This article is not intended as criticism targeted specifically at BitBabbler. It’s just a clear and relevant example of the many failed attempts at an auditable TRNG. We believe that a device genuinely suitable for audit is fundamentally very difficult to design, as auditability conflicts with usability for the end user.
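Checking output length against entropy generated is only possible with access to raw samples. The Python sketch below illustrates that accounting, assuming the Most Common Value estimator from NIST SP 800-90B (section 6.3.1) as the min-entropy estimate. The sample data is hypothetical, and a full 800-90B assessment runs several estimators and keeps the lowest.

```python
import math
from collections import Counter


def mcv_min_entropy(samples: list[int]) -> float:
    """Most Common Value min-entropy estimate, in bits per sample (after SP 800-90B 6.3.1)."""
    length = len(samples)
    p_hat = max(Counter(samples).values()) / length
    # Upper bound of a 99% confidence interval on the most common value's probability.
    p_upper = min(1.0, p_hat + 2.576 * math.sqrt(p_hat * (1.0 - p_hat) / (length - 1)))
    return -math.log2(p_upper)


def max_output_bits(samples: list[int]) -> int:
    """Most output bits allowed if output length must not exceed estimated entropy."""
    return math.floor(len(samples) * mcv_min_entropy(samples))


# Hypothetical raw samples from a 70/30 biased one-bit source.
raw = [0] * 700 + [1] * 300
print(max_output_bits(raw))        # far fewer than 1000 bits, despite 1000 samples
print(max_output_bits([7] * 100))  # 0: a stuck source yields no entropy
```

MCV looks only at symbol frequencies, so an alternating 0, 1, 0, 1 source would fool it. That is why the standard combines several estimators, and why a device that hides its raw samples cannot be checked at all.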

So. Buy a commercial TRNG and hope that it works as a good quality TRNG. Build an entropy source and know that it works as an entropy source. Then do what you will with the entropy…